---

language:

- en

- multilingual

- ar

- bg

- ca

- cs

- da

- de

- el

- es

- et

- fa

- fi

- fr

- gl

- gu

- he

- hi

- hu

- hy

- id

- it

- ja

- ka

- ko

- ku

- lt

- lv

- mk

- mn

- mr

- ms

- my

- nb

- nl

- pl

- pt

- ro

- ru

- sk

- sl

- sq

- sr

- sv

- th

- tr

- uk

- ur

- vi

- zh

- hr

license: apache-2.0

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer

- dataset_size:62698210

- loss:MatryoshkaLoss

- loss:MultipleNegativesRankingLoss

widget:

- source_sentence: A man is jumping unto his filthy bed.
  sentences:
  - A man is ouside near the beach.
  - The bed is dirty.
  - The man is on the moon.
- source_sentence: Ship Simulator (video game)
  sentences:
  - ಯಂತ್ರ ಕಲಿಕೆ
  - Ship Simulator
  - جان بابتيست لويس بيير
- source_sentence: And so was the title of his book on the Israeli massacre of Gaza
    in 2008-2009.
  sentences:
  - Antony Lowenstein ist ein bekannter Blogger über den Nahen Osten.
  - Y ese fue el título de su libro sobre la masacre israelí de Gaza entre 2008 y
    2009.
  - 'C''était au temps où vous ne pouviez pas avoir un film de Nollywood qui n''incluait
    pas un ou une combinaison des aspects suivants: fraude, gris-gris/sorcellerie,
    vol à main armée, inceste, adultère, cannibalisme et, naturellement notre sujet
    favori, la corruption.'
- source_sentence: In fact, it contributes more than 12 percent to Thailand’s GDP.
  sentences:
  - Einige Provider folgten der Anordnung, aber „Fitna“ konnte noch über andere Anbieter
    angesehen werden.
  - En fait, il représente plus de 12% du produit national brut thaïlandais.
  - '"Aber von heute an...heute ist der Anfang eines neuen Lebens für mich."'
- source_sentence: It is known for its dry red chili powder .
  sentences:
  - These monsters will move in large groups .
  - It is popular for dry red chili powder .
  - In a statistical overview derived from writings by and about William George Aston
    , OCLC/WorldCat includes roughly 90 + works in 200 + publications in 4 languages
    and 3,000 + library holdings .

datasets:

- sentence-transformers/parallel-sentences-wikititles

- sentence-transformers/parallel-sentences-tatoeba

- sentence-transformers/parallel-sentences-talks

- sentence-transformers/parallel-sentences-europarl

- sentence-transformers/parallel-sentences-global-voices

- sentence-transformers/parallel-sentences-muse

- sentence-transformers/parallel-sentences-wikimatrix

- sentence-transformers/parallel-sentences-opensubtitles

- sentence-transformers/stackexchange-duplicates

- sentence-transformers/quora-duplicates

- sentence-transformers/wikianswers-duplicates

- sentence-transformers/all-nli

- sentence-transformers/simple-wiki

- sentence-transformers/altlex

- sentence-transformers/flickr30k-captions

- sentence-transformers/coco-captions

- sentence-transformers/nli-for-simcse

- jinaai/negation-dataset

pipeline_tag: sentence-similarity

library_name: sentence-transformers

co2_eq_emissions:

  emissions: 196.7083299812303
  energy_consumed: 0.5060646201491896
  source: codecarbon
  training_type: fine-tuning
  on_cloud: false
  cpu_model: 13th Gen Intel(R) Core(TM) i7-13700K
  ram_total_size: 31.777088165283203
  hours_used: 3.163
  hardware_used: 1 x NVIDIA GeForce RTX 3090

---

# Static Embeddings with BERT Multilingual uncased tokenizer finetuned on various datasets

This is a [sentence-transformers](https://www.SBERT.net) model trained on the [wikititles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikititles), [tatoeba](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-tatoeba), [talks](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-talks), [europarl](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-europarl), [global_voices](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-global-voices), [muse](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-muse), [wikimatrix](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikimatrix), [opensubtitles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-opensubtitles), [stackexchange](https://huggingface.co/datasets/sentence-transformers/stackexchange-duplicates), [quora](https://huggingface.co/datasets/sentence-transformers/quora-duplicates), [wikianswers_duplicates](https://huggingface.co/datasets/sentence-transformers/wikianswers-duplicates), [all_nli](https://huggingface.co/datasets/sentence-transformers/all-nli), [simple_wiki](https://huggingface.co/datasets/sentence-transformers/simple-wiki), [altlex](https://huggingface.co/datasets/sentence-transformers/altlex), [flickr30k_captions](https://huggingface.co/datasets/sentence-transformers/flickr30k-captions), [coco_captions](https://huggingface.co/datasets/sentence-transformers/coco-captions), [nli_for_simcse](https://huggingface.co/datasets/sentence-transformers/nli-for-simcse) and [negation](https://huggingface.co/datasets/jinaai/negation-dataset) datasets. It maps sentences & paragraphs to a 1024-dimensional dense vector space and can be used for semantic textual similarity, paraphrase mining, text classification, clustering, and more.

Read our [Static Embeddings blogpost](https://huggingface.co/blog/static-embeddings) to learn more about this model and how it was trained.

* **0 Active Parameters:** This model has no active parameters. A sentence embedding is simply the average of pre-computed token embeddings.

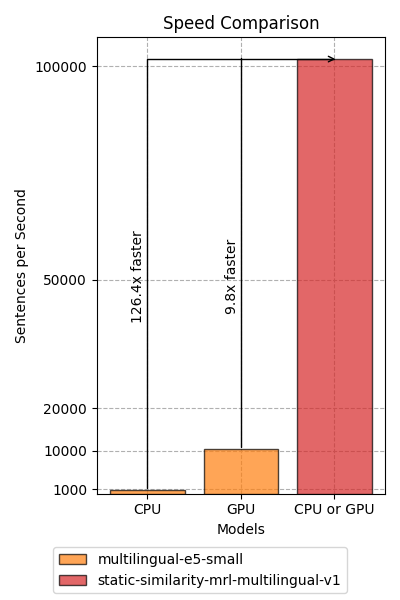

* **100x to 400x faster:** On CPU, this model is 100x to 400x faster than common options like [multilingual-e5-small](https://huggingface.co/intfloat/multilingual-e5-small). On GPU, it's 10x to 25x faster.

* **Matryoshka:** This model was trained with a [Matryoshka loss](https://huggingface.co/blog/matryoshka), allowing you to truncate the embeddings for faster retrieval at minimal performance costs.

* **Evaluations:** See [Evaluations](#evaluation) for details on performance on NanoBEIR, embedding speed, and Matryoshka dimensionality truncation.

* **Training Script:** See [train.py](train.py) for the training script used to train this model from scratch.

See [`static-retrieval-mrl-en-v1`](https://huggingface.co/sentence-transformers/static-retrieval-mrl-en-v1) for an English static embedding model that has been finetuned specifically for retrieval tasks.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Maximum Sequence Length:** inf tokens (no limit)

- **Output Dimensionality:** 1024 dimensions

- **Similarity Function:** Cosine Similarity

- **Training Datasets:**

    - [wikititles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikititles)
    - [tatoeba](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-tatoeba)
    - [talks](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-talks)
    - [europarl](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-europarl)
    - [global_voices](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-global-voices)
    - [muse](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-muse)
    - [wikimatrix](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikimatrix)
    - [opensubtitles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-opensubtitles)
    - [stackexchange](https://huggingface.co/datasets/sentence-transformers/stackexchange-duplicates)
    - [quora](https://huggingface.co/datasets/sentence-transformers/quora-duplicates)
    - [wikianswers_duplicates](https://huggingface.co/datasets/sentence-transformers/wikianswers-duplicates)
    - [all_nli](https://huggingface.co/datasets/sentence-transformers/all-nli)
    - [simple_wiki](https://huggingface.co/datasets/sentence-transformers/simple-wiki)
    - [altlex](https://huggingface.co/datasets/sentence-transformers/altlex)
    - [flickr30k_captions](https://huggingface.co/datasets/sentence-transformers/flickr30k-captions)
    - [coco_captions](https://huggingface.co/datasets/sentence-transformers/coco-captions)
    - [nli_for_simcse](https://huggingface.co/datasets/sentence-transformers/nli-for-simcse)
    - [negation](https://huggingface.co/datasets/jinaai/negation-dataset)

- **Languages:** en, multilingual, ar, bg, ca, cs, da, de, el, es, et, fa, fi, fr, gl, gu, he, hi, hu, hy, id, it, ja, ka, ko, ku, lt, lv, mk, mn, mr, ms, my, nb, nl, pl, pt, ro, ru, sk, sl, sq, sr, sv, th, tr, uk, ur, vi, zh, hr

- **License:** apache-2.0

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```
SentenceTransformer(
  (0): StaticEmbedding(
    (embedding): EmbeddingBag(105879, 1024, mode='mean')
  )
)
```
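
To make the `mode='mean'` part of the architecture concrete, here is a minimal pure-Python sketch of mean pooling over a static embedding table. The 4-token vocabulary, the table values, and the `embed_mean` helper are made up for illustration. The real model looks up rows in a 105,879 x 1024 table through its tokenizer and `EmbeddingBag`.

```python
# Toy illustration of mean pooling over a static embedding table.
# The table values and the helper name are hypothetical, not taken from the model.

def embed_mean(token_ids, table):
    """Average the rows of `table` selected by `token_ids`."""
    if not token_ids:
        raise ValueError("need at least one token id")
    dim = len(table[0])
    sums = [0.0] * dim
    for tid in token_ids:
        for j, value in enumerate(table[tid]):
            sums[j] += value
    return [s / len(token_ids) for s in sums]

# Hypothetical 4-token vocabulary with 3-dimensional embeddings.
toy_table = [
    [1.0, 0.0, 0.0],
    [0.0, 2.0, 0.0],
    [0.0, 0.0, 4.0],
    [2.0, 2.0, 2.0],
]

# A "sentence" made of token ids 0, 1 and 3.
sentence_embedding = embed_mean([0, 1, 3], toy_table)
print(sentence_embedding)
```

Because the result is a plain average, the order of the token ids does not affect the embedding in this sketch.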

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash

pip install -U sentence-transformers

```

Then you can load this model and run inference.

```python
from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub
model = SentenceTransformer("tomaarsen/static-similarity-mrl-multilingual-v1")

# Run inference
sentences = [
    'It is known for its dry red chili powder .',
    'It is popular for dry red chili powder .',
    'These monsters will move in large groups .',
]
embeddings = model.encode(sentences)
print(embeddings.shape)
# (3, 1024)

# Get the similarity scores for the embeddings
similarities = model.similarity(embeddings, embeddings)
print(similarities.shape)
# torch.Size([3, 3])
```

This model was trained with Matryoshka loss, so its embeddings can be truncated to a lower dimensionality with minimal performance loss.

Notably, a lower dimensionality allows for much faster downstream tasks, such as clustering or classification. You can specify a lower dimensionality with the `truncate_dim` argument when initializing the Sentence Transformer model:

```python
from sentence_transformers import SentenceTransformer

model = SentenceTransformer("tomaarsen/static-similarity-mrl-multilingual-v1", truncate_dim=256)
embeddings = model.encode([
    "I used to hate him.",
    "Раньше я ненавидел его."
])
print(embeddings.shape)
# => (2, 256)
```
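
Conceptually, truncation keeps only the leading components of each embedding before similarity is computed. The sketch below illustrates this in pure Python with made-up 8-dimensional vectors standing in for real 1024-dimensional embeddings. The `truncate` and `cosine` helpers are hypothetical names for this illustration, not library functions.

```python
import math

def truncate(vec, dim):
    """Keep only the first `dim` components of an embedding."""
    return vec[:dim]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up vectors; real embeddings would come from model.encode(...).
emb_a = [0.9, 0.1, 0.3, 0.2, 0.05, 0.01, 0.02, 0.03]
emb_b = [0.8, 0.2, 0.25, 0.1, 0.01, 0.04, 0.03, 0.02]

# Compare the similarity at full size and at two truncated sizes.
for dim in (8, 4, 2):
    score = cosine(truncate(emb_a, dim), truncate(emb_b, dim))
    print(dim, round(score, 4))
```

Cosine similarity normalizes by vector length, so truncated vectors need no separate renormalization step in this sketch.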

## Evaluation

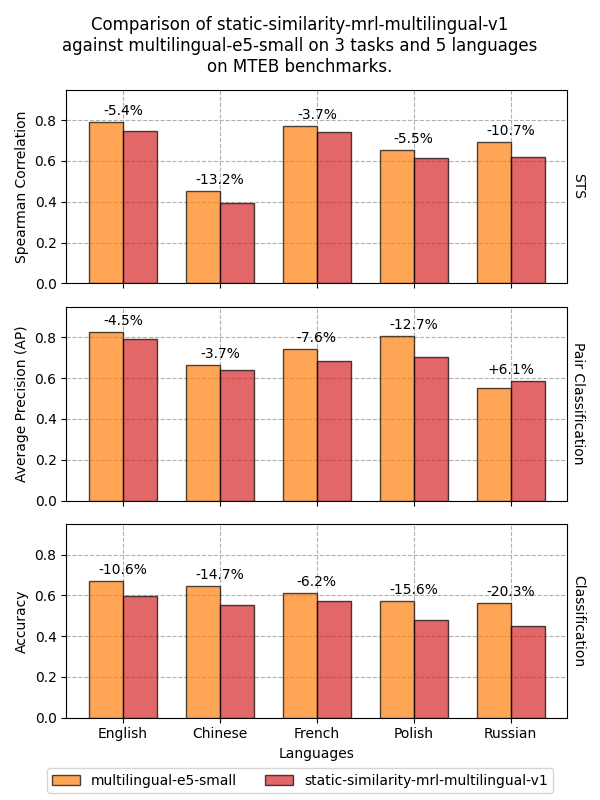

We've evaluated the model on five languages that are well covered by benchmarks across various tasks on [MTEB](https://huggingface.co/spaces/mteb/leaderboard).

We want to reiterate that this model is not intended for retrieval use cases. Instead, we evaluate on Semantic Textual Similarity (STS), Classification, and Pair Classification. We compare against the excellent and small [multilingual-e5-small](https://huggingface.co/intfloat/multilingual-e5-small) model.

Across all measured languages, [static-similarity-mrl-multilingual-v1](https://huggingface.co/sentence-transformers/static-similarity-mrl-multilingual-v1) reaches on average **92.3%** of the performance of [multilingual-e5-small](https://huggingface.co/intfloat/multilingual-e5-small) for STS, **95.52%** for Pair Classification, and **86.52%** for Classification.

To make up for this performance reduction, [static-similarity-mrl-multilingual-v1](https://huggingface.co/sentence-transformers/static-similarity-mrl-multilingual-v1) is approximately 125x faster on CPU and 10x faster on GPU than [multilingual-e5-small](https://huggingface.co/intfloat/multilingual-e5-small). Because attention models scale super-linearly with the number of tokens while static embedding models scale linearly, the speedup only grows as the number of tokens to encode increases.

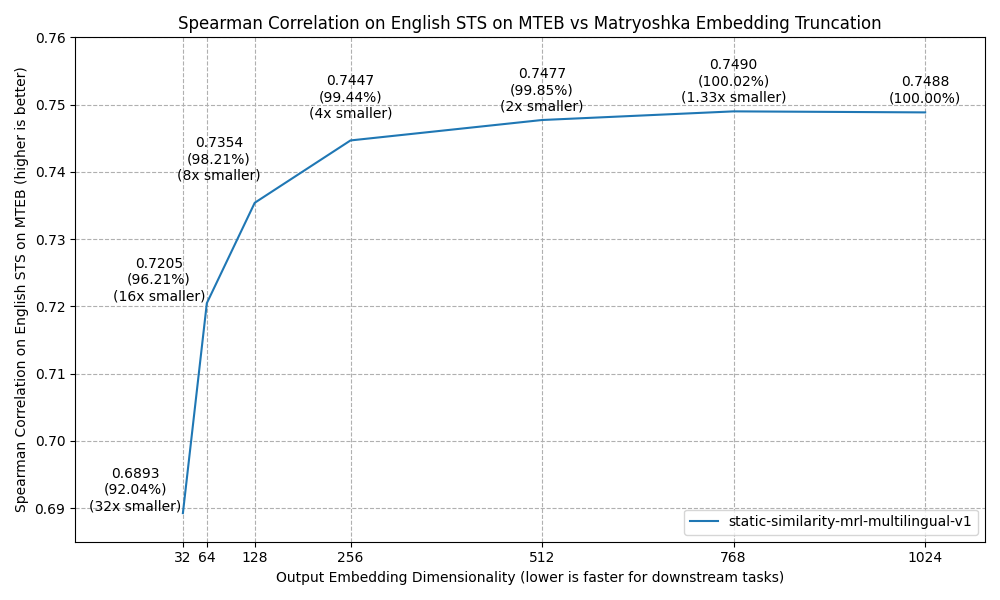

#### Matryoshka Evaluation

Lastly, we measured how English STS performance on MTEB changes under Matryoshka-style dimensionality reduction, i.e. when the output embeddings are truncated to a lower dimensionality.

As you can see, you can easily reduce the dimensionality by 2x or 4x with only minor performance hits (0.15% and 0.56%, respectively). If the speed of your downstream task or your storage costs are a bottleneck, this should allow you to alleviate some of those concerns.
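
As a rough illustration of the storage side, the arithmetic below estimates the raw size of an embedding index at full, 2x-reduced, and 4x-reduced dimensionality. It assumes embeddings are stored as float32 (4 bytes per value) and uses a hypothetical corpus of one million texts. Both are assumptions for the sake of the example, and index overhead is ignored.

```python
BYTES_PER_FLOAT32 = 4
NUM_EMBEDDINGS = 1_000_000  # hypothetical corpus size

def storage_mib(dim, count=NUM_EMBEDDINGS, bytes_per_value=BYTES_PER_FLOAT32):
    """Raw storage in MiB for `count` embeddings of size `dim`."""
    return dim * count * bytes_per_value / (1024 ** 2)

# Full size, 2x reduction, 4x reduction.
for dim in (1024, 512, 256):
    print(f"{dim:>4} dims: {storage_mib(dim):,.1f} MiB")
```

Storage scales linearly with the dimensionality, so a 4x truncation cuts the raw footprint to a quarter.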

## Training Details

### Training Datasets

#### wikititles

* Dataset: [wikititles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikititles) at [d92a4d2](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikititles/tree/d92a4d28a082c3c93563feb92a77de6074bdeb52)

* Size: 14,700,458 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 4 characters</li><li>mean: 18.33 characters</li><li>max: 84 characters</li></ul> | <ul><li>min: 4 characters</li><li>mean: 17.19 characters</li><li>max: 109 characters</li></ul> |

* Samples:

| english | non_english |

|:------------------------|:---------------------------|

| Le Vintrou | Ле-Вентру |

| Greening | Begrünung |

| Warrap | واراب (توضيح) |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```
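
To show how these parameters fit together, here is a simplified pure-Python sketch of a Matryoshka-style objective: an in-batch-negatives ranking loss is evaluated on truncated copies of the embeddings and the results are combined as a weighted sum. This is an illustration under assumptions, not the library implementation. The helper names, the toy vectors, and the similarity scale of 20 are chosen for the example, and the example uses two small dimensionalities instead of the six listed above.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mnr_loss(anchors, positives, scale=20.0):
    """In-batch negatives: anchor i should score highest against positive i."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [scale * cosine(anchor, pos) for pos in positives]
        max_logit = max(logits)
        # Numerically stable log-sum-exp over all candidates in the batch.
        log_sum_exp = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
        total += log_sum_exp - logits[i]  # cross-entropy with target index i
    return total / len(anchors)

def matryoshka_loss(anchors, positives, dims, weights):
    """Weighted sum of the base loss over truncated copies of the embeddings."""
    return sum(
        w * mnr_loss([a[:d] for a in anchors], [p[:d] for p in positives])
        for d, w in zip(dims, weights)
    )

# Made-up 4-dimensional embeddings for a batch of two (anchor, positive) pairs.
anchors = [[1.0, 0.2, 0.1, 0.0], [0.1, 1.0, 0.0, 0.3]]
positives = [[0.9, 0.1, 0.2, 0.1], [0.2, 0.8, 0.1, 0.4]]

print(matryoshka_loss(anchors, positives, dims=[4, 2], weights=[1, 1]))
```

With all weights set to 1, as in the configuration above, every truncation size contributes equally, which pushes the leading dimensions to carry most of the useful signal.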

#### tatoeba

* Dataset: [tatoeba](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-tatoeba) at [cec1343](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-tatoeba/tree/cec1343ab5a7a8befe99af4a2d0ca847b6c84743)

* Size: 4,138,956 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 5 characters</li><li>mean: 31.59 characters</li><li>max: 196 characters</li></ul> | <ul><li>min: 6 characters</li><li>mean: 30.95 characters</li><li>max: 161 characters</li></ul> |

* Samples:

| english | non_english |

|:-------------------------------------------------------|:-------------------------------------|

| I used to hate him. | Раньше я ненавидел его. |

| It is nothing less than an insult to her. | それはまさに彼女に対する侮辱だ。 |

| I've apologized, so lay off, OK? | 謝ったんだから、さっきのはチャラにしてよ。 |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### talks

* Dataset: [talks](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-talks) at [0c70bc6](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-talks/tree/0c70bc6714efb1df12f8a16b9056e4653563d128)

* Size: 9,750,031 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 5 characters</li><li>mean: 94.41 characters</li><li>max: 493 characters</li></ul> | <ul><li>min: 4 characters</li><li>mean: 82.49 characters</li><li>max: 452 characters</li></ul> |

* Samples:

| english | non_english |

|:------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------|

| (Laughter) EC: But beatbox started here in New York. | (Skratt) EC: Fast beatbox började här i New York. |

| I did not have enough money to buy food, and so to forget my hunger, I started singing." | 食べ物を買うお金もなかった だから 空腹を忘れるために 歌を歌い始めたの」 |

| That is another 25 million barrels a day. | 那时还要增加两千五百万桶的原油。 |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### europarl

* Dataset: [europarl](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-europarl) at [11007ec](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-europarl/tree/11007ecf9c790178a49a4cbd5cfea451a170f2dc)

* Size: 4,990,000 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 0 characters</li><li>mean: 147.77 characters</li><li>max: 668 characters</li></ul> | <ul><li>min: 0 characters</li><li>mean: 153.13 characters</li><li>max: 971 characters</li></ul> |

* Samples:

| english | non_english |

|:--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| (SK) I would like to stress three key points in relation to this issue. | (SK) Chtěla bych zdůraznit tři klíčové body, které jsou s tímto tématem spojeny. |

| Women have a higher recorded rate of unemployment, especially long term unemployment. | Blandt kvinder registreres større arbejdsløshed, især blandt langtidsarbejdsløse. |

| You will recall that we have occasionally had disagreements over how to interpret Rule 166 of our Rules of Procedure and that certain Members thought that the Presidency was not applying it properly, since it was not giving the floor for points of order that did not refer to the issue that was being debated at that moment. | De husker nok, at vi til tider har været uenige om fortolkningen af artikel 166 i vores forretningsorden, og at nogle af medlemmerne mente, at formanden ikke anvendte den korrekt, eftersom han ikke gav ordet til indlæg til forretningsordenen, når det ikke drejede sig om det spørgsmål, der blev drøftet på det pågældende tidspunkt. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### global_voices

* Dataset: [global_voices](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-global-voices) at [4cc20ad](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-global-voices/tree/4cc20add371f246bb1559b543f8b0dea178a1803)

* Size: 1,099,099 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 5 characters</li><li>mean: 115.13 characters</li><li>max: 740 characters</li></ul> | <ul><li>min: 3 characters</li><li>mean: 119.89 characters</li><li>max: 801 characters</li></ul> |

* Samples:

| english | non_english |

|:------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------------------|

| Generation 9/11: Cristina Balli (USA) from British Council USA on Vimeo. | Генерација 9/11: Кристина Бали (САД) од Британскиот совет САД на Вимео. |

| Jamaica: Mapping the state of emergency · Global Voices | Jamaica: Mapeando el estado de emergencia |

| It takes more than courage or bravery to do such a... http://fb.me/12T47y0Ml | Θέλει κάτι παραπάνω από κουράγιο ή ανδρεία για να κάνεις κάτι τέτοιο... http://fb.me/12T47y0Ml |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### muse

* Dataset: [muse](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-muse) at [238c077](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-muse/tree/238c077ac66070748aaf2ab1e45185b0145b7291)

* Size: 1,368,274 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 3 characters</li><li>mean: 7.38 characters</li><li>max: 16 characters</li></ul> | <ul><li>min: 1 characters</li><li>mean: 7.33 characters</li><li>max: 18 characters</li></ul> |

* Samples:

| english | non_english |

|:---------------------|:--------------------|

| metro | metrou |

| suggest | 제안 |

| nnw | nno |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### wikimatrix

* Dataset: [wikimatrix](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikimatrix) at [74a4cb1](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikimatrix/tree/74a4cb15422cdd0c3aacc93593b6cb96a9b9b3a9)

* Size: 9,688,498 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 16 characters</li><li>mean: 124.31 characters</li><li>max: 418 characters</li></ul> | <ul><li>min: 11 characters</li><li>mean: 129.99 characters</li><li>max: 485 characters</li></ul> |

* Samples:

| english | non_english |

|:-------------------------------------------------------------------------------------------------------------------------------------------|:--------------------------------------------------------------------------------------------------------------------------------------|

| 3) A set of wikis to support collaboration activities and disseminate information about good practices. | 3) Un conjunt de wikis per donar suport a les activitats de col·laboració i difusió d'informació sobre bones pràctiques. |

| Daily cruiseferry services operate to Copenhagen and Frederikshavn in Denmark, and to Kiel in Germany. | Dịch vụ phà du lịch hàng ngày vận hành tới Copenhagen và Frederikshavn tại Đan Mạch, và tới Kiel tại Đức. |

| In late April 1943, Philipp was ordered to report to Hitler's headquarters, where he stayed for most of the next four months. | Sent i april 1943 fick Philipp ordern att rapportera till Hitlers högkvarter, där han stannade i fyra månader. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### opensubtitles

* Dataset: [opensubtitles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-opensubtitles) at [d86a387](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-opensubtitles/tree/d86a387587ab6f2fd9ec7453b2765cec68111c87)

* Size: 4,990,000 training samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

|         | english | non_english |
|:--------|:--------|:------------|
| type    | string  | string      |
| details | <ul><li>min: 0 characters</li><li>mean: 34.43 characters</li><li>max: 220 characters</li></ul> | <ul><li>min: 0 characters</li><li>mean: 26.99 characters</li><li>max: 118 characters</li></ul> |

* Samples:

| english | non_english |

|:------------------------------------------------------------------------|:---------------------------------------------------------------|

| Would you send a tomato juice, black coffee and a masseur? | هل لك أن ترسل لي عصير طماطم قهوة سوداء.. والمدلك! |

| To hear the angels sing | لكى تسمع غناء الملائكه |

| Brace yourself. | " تمالك نفسك " بريكر |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### stackexchange

* Dataset: [stackexchange](https://huggingface.co/datasets/sentence-transformers/stackexchange-duplicates) at [1c9657a](https://huggingface.co/datasets/sentence-transformers/stackexchange-duplicates/tree/1c9657aec12d9e101667bb9593efcc623c4a68ff)

* Size: 250,519 training samples

* Columns: post1 and post2

* Approximate statistics based on the first 1000 samples:

|         | post1  | post2  |
|:--------|:-------|:-------|
| type    | string | string |
| details | <ul><li>min: 77 characters</li><li>mean: 669.56 characters</li><li>max: 3982 characters</li></ul> | <ul><li>min: 81 characters</li><li>mean: 641.44 characters</li><li>max: 4053 characters</li></ul> |

* Samples:

| post1 | post2 |

|:--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| New user question about passwords Just got a refurbished computer with Ubuntu as the OS. Have never even heard of the OS and now I'm trying to learn. When I boot the system, it starts up great. But, if I try to navigate around, it requires a password. Is there a trick to finding the initial password? Please advise. | How do I reset a lost administrative password? I'm working on a Ubuntu system, and my client has completely forgotten his administrative password. He doesn't even remember entering one; however it is there. I've tried the suggestions on the website, and I have been unsuccessful in deleting the password so that I can download applets required for running some files. Is there a solution? |

| Reorder a list of string randomly but constant in a period of time I need to reorder a list in a random way but I want to have the same result on a short period of time ... So I have: var list = new String[] { "Angie", "David", "Emily", "James" } var shuffled = list.OrderBy(v => "4a78926c")).ToList(); But I always get the same order ... I could use Guid.NewGuid() but then I would have a different result in a short period of time. How can I do this? | Randomize a List What is the best way to randomize the order of a generic list in C#? I've got a finite set of 75 numbers in a list I would like to assign a random order to, in order to draw them for a lottery type application. |

| Made a mistake on check need help to fix I wrote a check and put the amount in the pay to order spot. Can I just mark it out, put the name in the spot and finish writing the check? | How to correct a mistake made when writing a check? I think I know the answer to this, but I'm not sure, and it's a good question, so I'll ask: What is the accepted/proper way to correct a mistake made on a check? For instance, I imagine that in any given January, some people accidentally date a check in the previous year. Is there a way to correct such a mistake, or must a check be voided (and wasted)? Pointers to definitive information (U.S., Canada, and elsewhere) are helpful. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

#### quora

* Dataset: [quora](https://huggingface.co/datasets/sentence-transformers/quora-duplicates) at [451a485](https://huggingface.co/datasets/sentence-transformers/quora-duplicates/tree/451a4850bd141edb44ade1b5828c259abd762cdb)

* Size: 101,762 training samples

* Columns: anchor, positive, and negative

* Approximate statistics based on the first 1000 samples:

|         | anchor | positive | negative |
|:--------|:-------|:---------|:---------|
| type    | string | string   | string   |
| details | <ul><li>min: 16 characters</li><li>mean: 53.47 characters</li><li>max: 249 characters</li></ul> | <ul><li>min: 16 characters</li><li>mean: 52.63 characters</li><li>max: 237 characters</li></ul> | <ul><li>min: 14 characters</li><li>mean: 54.67 characters</li><li>max: 292 characters</li></ul> |

* Samples:

| anchor | positive | negative |

|:------------------------------------------------------|:-------------------------------------------------|:----------------------------------------------------|

| What food should I try in Brazil? | Which foods should I try in Brazil? | What meat should one eat in Argentina? |

| What is the best way to get a threesome? | How does one find a threesome? | How is the experience of a threesome? |

| Whether I do CA or MBA? Which is better? | Which is better CA or MBA? | Which is better CA or IT? |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```
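For a dataset with anchor, positive, and negative columns, such as the one above, the explicit negatives can be treated as extra candidates alongside the in-batch positives. The sketch below illustrates that under stated assumptions: cosine similarity, a scale of 20, and hypothetical 3-dimensional toy embeddings. The function names are illustrative and this is not the library's code.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def mnr_loss_with_negatives(anchors, positives, negatives, scale=20.0):
    """Cross-entropy over all positives plus all explicit negatives in the batch."""
    # Positives come first, so the correct candidate for anchors[i] sits at index i.
    candidates = positives + negatives
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [scale * cosine(anchor, c) for c in candidates]
        peak = max(logits)  # subtract the max for a stable log-sum-exp
        log_z = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_z - logits[i]
    return total / len(anchors)


# Toy embeddings (hypothetical values) for two triplets.
anchors = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.2]]
positives = [[0.9, 0.2, 0.1], [0.1, 0.9, 0.3]]
negatives = [[0.7, 0.6, 0.0], [0.5, 0.5, 0.5]]

with_negatives = mnr_loss_with_negatives(anchors, positives, negatives)
without_negatives = mnr_loss_with_negatives(anchors, positives, [])
print(with_negatives, without_negatives)
```

Adding candidates can only raise the loss for fixed embeddings, which is why hard negatives give the model a stronger training signal than in-batch negatives alone.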

#### wikianswers_duplicates

* Dataset: [wikianswers_duplicates](https://huggingface.co/datasets/sentence-transformers/wikianswers-duplicates) at [9af6367](https://huggingface.co/datasets/sentence-transformers/wikianswers-duplicates/tree/9af6367d1ad084daf8a9de9c21bc33fcdc7770d0)

* Size: 9,990,000 training samples

* Columns: anchor and positive

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 14 characters; mean: 47.39 characters; max: 151 characters | min: 15 characters; mean: 47.58 characters; max: 154 characters |

* Samples:

| anchor | positive |

|:----------------------------------------------------------------------|:-------------------------------------------------------------------------|

| Did Democritus belive matter was continess? | Why did democritus call the smallest pice of matter atomos? |

| Tell you about the most ever done to satisfy a customer? | How do you satisfy your client or customer? |

| How is a chemical element different from a compound? | How is a chemical element different to a compound? |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```
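The "approximate statistics" tables in these dataset entries report minimum, mean, and maximum character lengths over the first 1000 samples of each column. As a rough sketch of how such numbers could be reproduced, assuming rows are plain dictionaries keyed by column name (the helper name `char_stats` is hypothetical and is not part of any library):

```python
from statistics import mean


def char_stats(rows, column, limit=1000):
    """Min, mean, and max character length of `column` over the first `limit` rows."""
    lengths = [len(row[column]) for row in rows[:limit]]
    return {
        "min": min(lengths),
        "mean": round(mean(lengths), 2),
        "max": max(lengths),
    }


# Two of the sample pairs shown in the entry above, used as a tiny stand-in dataset.
rows = [
    {
        "anchor": "Tell you about the most ever done to satisfy a customer?",
        "positive": "How do you satisfy your client or customer?",
    },
    {
        "anchor": "How is a chemical element different from a compound?",
        "positive": "How is a chemical element different to a compound?",
    },
]

for column in ("anchor", "positive"):
    print(column, char_stats(rows, column))
```

On the real data the same function would be applied to the first 1000 rows of each column, so the reported figures describe a prefix of the dataset rather than the whole of it.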

#### all_nli

* Dataset: [all_nli](https://huggingface.co/datasets/sentence-transformers/all-nli) at [d482672](https://huggingface.co/datasets/sentence-transformers/all-nli/tree/d482672c8e74ce18da116f430137434ba2e52fab)

* Size: 557,850 training samples

* Columns: anchor, positive, and negative

* Approximate statistics based on the first 1000 samples:

| | anchor | positive | negative |

|:--------|:------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------|

| type | string | string | string |

| details | min: 18 characters; mean: 34.88 characters; max: 193 characters | min: 15 characters; mean: 46.49 characters; max: 181 characters | min: 16 characters; mean: 50.47 characters; max: 204 characters |

* Samples:

| anchor | positive | negative |

|:---------------------------------------------------------------------------|:-------------------------------------------------|:-----------------------------------------------------------|

| A person on a horse jumps over a broken down airplane. | A person is outdoors, on a horse. | A person is at a diner, ordering an omelette. |

| Children smiling and waving at camera | There are children present | The kids are frowning |

| A boy is jumping on skateboard in the middle of a red bridge. | The boy does a skateboarding trick. | The boy skates down the sidewalk. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```
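Because the loss is applied at every size in `matryoshka_dims`, an embedding can in principle be shortened to any of those sizes at inference time by keeping its leading components. The sketch below shows one way to do this in plain Python, assuming the truncated vector should be re-normalised to unit length before cosine or dot-product comparison. The helper name and the synthetic input vector are illustrative only.

```python
import math

# Sizes listed under "matryoshka_dims" in the loss configuration.
MATRYOSHKA_DIMS = [1024, 512, 256, 128, 64, 32]


def truncate_and_normalize(embedding, dim):
    """Keep the first `dim` components and rescale the result to unit length."""
    if dim not in MATRYOSHKA_DIMS:
        raise ValueError(f"dim must be one of {MATRYOSHKA_DIMS}")
    if len(embedding) < dim:
        raise ValueError("embedding is shorter than the requested dimension")
    prefix = list(embedding[:dim])
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        return prefix  # nothing to rescale for an all-zero prefix
    return [x / norm for x in prefix]


# Synthetic stand-in for a 1024-dimensional model output (not real model data).
full = [math.sin(i + 1.0) for i in range(1024)]

small = truncate_and_normalize(full, 64)
print(len(small))
```

Smaller sizes trade some accuracy for lower storage and faster search, so the right size depends on the application.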

#### simple_wiki

* Dataset: [simple_wiki](https://huggingface.co/datasets/sentence-transformers/simple-wiki) at [60fd9b4](https://huggingface.co/datasets/sentence-transformers/simple-wiki/tree/60fd9b4680642ace0e2604cc2de44d376df419a7)

* Size: 102,225 training samples

* Columns: text and simplified

* Approximate statistics based on the first 1000 samples:

| | text | simplified |

|:--------|:------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 18 characters; mean: 149.3 characters; max: 573 characters | min: 16 characters; mean: 123.58 characters; max: 576 characters |

* Samples:

| text | simplified |

|:----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| The next morning , it had a small CDO and well-defined bands , and the system , either a weak tropical storm or a strong tropical depression , likely reached its peak . | The next morning , it had a small amounts of convection near the center and well-defined bands , and the system , either a weak tropical storm or a strong tropical depression , likely reached its peak . |

| The region of measurable parameter space that corresponds to a regime is very often loosely defined . Examples include `` the superfluid regime '' , `` the steady state regime '' or `` the femtosecond regime '' . | This is common if a regime is threatened by another regime . |

| The Lamborghini Diablo is a high-performance mid-engined sports car that was built by Italian automaker Lamborghini between 1990 and 2001 . | The Lamborghini Diablo is a sport car that was built by Lamborghini from 1990 to 2001 . |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### altlex

* Dataset: [altlex](https://huggingface.co/datasets/sentence-transformers/altlex) at [97eb209](https://huggingface.co/datasets/sentence-transformers/altlex/tree/97eb20963455c361d5a81c107c3596cff9e0cd82)

* Size: 112,696 training samples

* Columns: text and simplified

* Approximate statistics based on the first 1000 samples:

| | text | simplified |

|:--------|:-------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 13 characters; mean: 131.03 characters; max: 492 characters | min: 13 characters; mean: 112.41 characters; max: 492 characters |

* Samples:

| text | simplified |

|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------------------------------------------------------------|

| Reinforcement and punishment are the core tools of operant conditioning . | Principles of operant conditioning : |

| The Japanese Ministry of Health , Labour and Welfare defines `` hikikomori '' as people who refuse to leave their house and , thus , isolate themselves from society in their homes for a period exceeding six months . | The Japanese Ministry of Health , Labour and Welfare defines hikikomori as people who refuse to leave their house for over six months . |

| It has six rows of black spines and has a pair of long , clubbed spines on the head . | It has a pair of long , clubbed spines on the head . |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### flickr30k_captions

* Dataset: [flickr30k_captions](https://huggingface.co/datasets/sentence-transformers/flickr30k-captions) at [0ef0ce3](https://huggingface.co/datasets/sentence-transformers/flickr30k-captions/tree/0ef0ce31492fd8dc161ed483a40d3c4894f9a8c1)

* Size: 158,881 training samples

* Columns: caption1 and caption2

* Approximate statistics based on the first 1000 samples:

| | caption1 | caption2 |

|:--------|:------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 20 characters; mean: 63.19 characters; max: 318 characters | min: 13 characters; mean: 63.65 characters; max: 205 characters |

* Samples:

| caption1 | caption2 |

|:--------------------------------------------------------------------------------------------------|:----------------------------------------------------------------------------------|

| Four women pose for a photograph with a man in a bright yellow suit. | A group of friends get their photo taken with a man in a green suit. |

| A many dressed in army gear walks on the crash walking a brown dog. | A man with army fatigues is walking his dog. |

| Four people are sitting around a kitchen counter while one is drinking from a glass. | A group of people sit around a breakfast bar. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### coco_captions

* Dataset: [coco_captions](https://huggingface.co/datasets/sentence-transformers/coco-captions) at [bd26018](https://huggingface.co/datasets/sentence-transformers/coco-captions/tree/bd2601822b9af9a41656d678ffbd5c80d81e276a)

* Size: 414,010 training samples

* Columns: caption1 and caption2

* Approximate statistics based on the first 1000 samples:

| | caption1 | caption2 |

|:--------|:------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 30 characters; mean: 52.57 characters; max: 151 characters | min: 29 characters; mean: 52.71 characters; max: 186 characters |

* Samples:

| caption1 | caption2 |

|:-------------------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------|

| THERE ARE FRIENDS ON THE BEACH POSING | A group of people standing together on the beach while holding a woman. |

| a lovely white bathroom with white shower curtain. | A white toilet sitting in a bathroom next to a sink. |

| Two drinking glass on a counter and a man holding a knife looking at something in front of him. | A restaurant employee standing behind two cups on a counter. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### nli_for_simcse

* Dataset: [nli_for_simcse](https://huggingface.co/datasets/sentence-transformers/nli-for-simcse) at [926cae4](https://huggingface.co/datasets/sentence-transformers/nli-for-simcse/tree/926cae4af15a99b5cc2b053212bb52a4b377c418)

* Size: 274,951 training samples

* Columns: anchor, positive, and negative

* Approximate statistics based on the first 1000 samples:

| | anchor | positive | negative |

|:--------|:------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------|

| type | string | string | string |

| details | min: 11 characters; mean: 87.69 characters; max: 483 characters | min: 7 characters; mean: 43.85 characters; max: 244 characters | min: 7 characters; mean: 43.87 characters; max: 172 characters |

* Samples:

| anchor | positive | negative |

|:-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:----------------------------------------------------------------------|:-----------------------------------------------------------|

| A white horse and a rider wearing a ale blue shirt, white pants, and a black helmet are jumping a hurdle. | An equestrian is having a horse jump a hurdle. | A competition is taking place in a kitchen. |

| A group of people in a dome like building. | A gathering inside a building. | Cats are having a party. |

| Home to thousands of sheep and a few scattered farming families, the area is characterized by the stark beauty of bare peaks, rugged fells, and the most remote lakes, combined with challenging, narrow roads. | There are no wide and easy roads going through the area. | There are more humans than sheep in the area. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### negation

* Dataset: [negation](https://huggingface.co/datasets/jinaai/negation-dataset) at [cd02256](https://huggingface.co/datasets/jinaai/negation-dataset/tree/cd02256426cc566d176285a987e5436f1cd01382)

* Size: 10,000 training samples

* Columns: anchor, entailment, and negative

* Approximate statistics based on the first 1000 samples:

| | anchor | entailment | negative |

|:--------|:-----------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------|

| type | string | string | string |

| details | min: 9 characters; mean: 65.84 characters; max: 275 characters | min: 7 characters; mean: 34.06 characters; max: 167 characters | min: 9 characters; mean: 37.26 characters; max: 166 characters |

* Samples:

| anchor | entailment | negative |

|:---------------------------------------------------------------------------------------------------|:------------------------------------------------------------------|:----------------------------------------------------------------------|

| A boy with his hands above his head stands on a cement pillar above the cobblestones. | A boy is standing on a pillar over the cobblestones. | A boy is not standing on a pillar over the cobblestones. |

| The man works hard in his home office. | home based worker works harder | home based worker does not work harder |

| Man in black shirt plays silver electric guitar. | A man plays a silver electric guitar. | A man does not play a silver electric guitar. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### wikititles

* Dataset: [wikititles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikititles) at [d92a4d2](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikititles/tree/d92a4d28a082c3c93563feb92a77de6074bdeb52)

* Size: 14,700,458 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:----------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 4 characters; mean: 18.33 characters; max: 77 characters | min: 4 characters; mean: 17.3 characters; max: 83 characters |

* Samples:

| english | non_english |

|:-----------------------------------------------------------------|:-------------------------------------|

| Bjørvika | 比約維卡 |

| Old Mystic, Connecticut | Олд Мистик (Конектикат) |

| Cystic fibrosis transmembrane conductance regulator | CFTR |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### tatoeba

* Dataset: [tatoeba](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-tatoeba) at [cec1343](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-tatoeba/tree/cec1343ab5a7a8befe99af4a2d0ca847b6c84743)

* Size: 4,138,956 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:-----------------------------------------------------------------------------------------------|:----------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 5 characters; mean: 31.83 characters; max: 235 characters | min: 4 characters; mean: 31.7 characters; max: 189 characters |

* Samples:

| english | non_english |

|:-----------------------------------------------------|:-----------------------------------------------------|

| You are not consistent in your actions. | Je bent niet consequent in je handelen. |

| Neither of them seemed old. | Ninguno de ellos lucía viejo. |

| Stand up, please. | Устаните, молим Вас. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### talks

* Dataset: [talks](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-talks) at [0c70bc6](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-talks/tree/0c70bc6714efb1df12f8a16b9056e4653563d128)

* Size: 9,750,031 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:-----------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 9 characters; mean: 94.78 characters; max: 634 characters | min: 4 characters; mean: 84.61 characters; max: 596 characters |

* Samples:

| english | non_english |

|:------------------------------------------------------------------|:-----------------------------------------------------------------------|

| I'm earthed in my essence, and my self is suspended. | Je suis ancrée, et mon moi est temporairement inexistant. |

| It's not back on your shoulder. | Dar nu e înapoi pe umăr. |

| They're usually students who've never seen a desert. | たいていの学生は砂漠を見たこともありません |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### europarl

* Dataset: [europarl](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-europarl) at [11007ec](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-europarl/tree/11007ecf9c790178a49a4cbd5cfea451a170f2dc)

* Size: 10,000 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:-------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 0 characters; mean: 148.52 characters; max: 1215 characters | min: 0 characters; mean: 154.44 characters; max: 1316 characters |

* Samples:

| english | non_english |

|:----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| Mr Schmidt, Mr Trichet, I absolutely cannot go along with these proposals. | Pane Schmidte, pane Trichete, s těmito návrhy nemohu vůbec souhlasit. |

| The Council and Parliament recently adopted the regulation on the Single European Sky, one of the provisions of which was Community membership of Eurocontrol, so that Parliament has already indirectly expressed its views on this matter. | Der Rat und das Parlament haben kürzlich die Verordnung über die Schaffung eines einheitlichen europäischen Luftraums verabschiedet, in der unter anderem die Mitgliedschaft der Gemeinschaft bei Eurocontrol festgelegt ist, so dass das Parlament seine Auffassungen hierzu indirekt bereits dargelegt hat. |

| It was held over from the January part-session until this part-session. | Ihre Behandlung wurde von der Januar-Sitzung auf die jetzige vertagt. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### global_voices

* Dataset: [global_voices](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-global-voices) at [4cc20ad](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-global-voices/tree/4cc20add371f246bb1559b543f8b0dea178a1803)

* Size: 1,099,099 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 3 characters; mean: 115.61 characters; max: 629 characters | min: 3 characters; mean: 121.61 characters; max: 664 characters |

* Samples:

| english | non_english |

|:---------------------------------------------------------------------------------------------------------------------------------------------------------|:----------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| Haiti: Security vs. Relief? · Global Voices | Haïti : Zones rouges, zones vertes - sécurité contre aide humanitaire ? |

| In order to prevent weapon smuggling through tunnels, his forces would have fought and killed Palestinians over a sustained period of time. | Con el fin de impedir el contrabando de armas a través de túneles, sus fuerzas habrían combatido y muerto palestinos durante un largo período de tiempo. |

| Tombstone of Vitalis, an ancient Roman cavalry officer, displayed in front of the Skopje City Museum. | Lápida de Vitalis, un antiguo oficial romano de caballería, exhibida frente al Museo de la Ciudad de Skopje. |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### muse

* Dataset: [muse](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-muse) at [238c077](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-muse/tree/238c077ac66070748aaf2ab1e45185b0145b7291)

* Size: 1,368,274 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:--------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 3 characters; mean: 7.5 characters; max: 17 characters | min: 1 characters; mean: 7.39 characters; max: 16 characters |

* Samples:

| english | non_english |

|:-------------------------|:-------------------------|

| generalised | γενικευμένη |

| language | jazyku |

| finalised | финализиран |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### wikimatrix

* Dataset: [wikimatrix](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikimatrix) at [74a4cb1](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-wikimatrix/tree/74a4cb15422cdd0c3aacc93593b6cb96a9b9b3a9)

* Size: 9,688,498 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 11 characters; mean: 122.6 characters; max: 424 characters | min: 10 characters; mean: 128.09 characters; max: 579 characters |

* Samples:

| english | non_english |

|:-------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------|

| Along with the adjacent waters, it was declared a nature reserve in 2002. | Juntament amb les aigües adjacents, va ser declarada reserva natural el 2002. |

| Like her husband, Charlotte was a patron of astronomy. | Stejně jako manžel byla Šarlota patronkou astronomie. |

| Some of the music consists of simple sounds, such as a wind effect heard over the poem "Soon Alaska". | Sommige muziekstukken bevatten eenvoudige geluiden, zoals het geluid van de wind tijdens het gedicht "Soon Alaska". |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### opensubtitles

* Dataset: [opensubtitles](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-opensubtitles) at [d86a387](https://huggingface.co/datasets/sentence-transformers/parallel-sentences-opensubtitles/tree/d86a387587ab6f2fd9ec7453b2765cec68111c87)

* Size: 10,000 evaluation samples

* Columns: english and non_english

* Approximate statistics based on the first 1000 samples:

| | english | non_english |

|:--------|:-----------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 0 characters; mean: 35.01 characters; max: 200 characters | min: 0 characters; mean: 27.79 characters; max: 143 characters |

* Samples:

| english | non_english |

|:-------------------------------------------|:---------------------------------------|

| - I don't need my medicine. | -لا أحتاج لدوائي |

| The Sovereign... Ah. | (الطاغية)! |

| The other two from your ship. | الإثنان الأخران من سفينتك |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

1024,

512,

256,

128,

64,

32

],

"matryoshka_weights": [

1,

1,

1,

1,

1,

1

],

"n_dims_per_step": -1

}

```

#### stackexchange

* Dataset: [stackexchange](https://huggingface.co/datasets/sentence-transformers/stackexchange-duplicates) at [1c9657a](https://huggingface.co/datasets/sentence-transformers/stackexchange-duplicates/tree/1c9657aec12d9e101667bb9593efcc623c4a68ff)

* Size: 250,519 evaluation samples

* Columns: post1 and post2

* Approximate statistics based on the first 1000 samples:

| | post1 | post2 |

|:--------|:--------------------------------------------------------------------------------------------------|:--------------------------------------------------------------------------------------------------|

| type | string | string |

| details | min: 64 characters; mean: 669.92 characters; max: 4103 characters | min: 62 characters; mean: 644.68 characters; max: 4121 characters |

* Samples:

| post1 | post2 |
|:------|:------|
| Find the particular solution for this linear ODE $y' '-2y'+5y=e^x \cos2x$. Find the particular solution for this linear ODE :$y' '-2y'+5y=e^x \cos2x$. How can I use Undetermined coefficients method ? | Particular solution of $y''-4y'+5y = 4e^{2x} (\sin x)$ How do I find the particular solution of this second order inhomogenous differential equation? (Using undetermined coefficients). $y''-4y'+5y = 4e^{2x} (\sin x)$ I can find the generel homogenous solutions but I need help for the particular. |
| Unbounded sequence has an divergent subsequence Show that if $(x_n)$ is unbounded, then there exists a subsequence $(x_{n_k})$ such that $\lim 1/(x_{n_k}) =0.$ I was thinking that $(x_n)$ is a subsequence of itself. WLOG, suppose $(x_n)$ does not have an upper bound. By Algebraic Limit Theorem, $\lim 1/(x_{n_k}) =0.$ Is there any flaws in my proof? | Given the sequence $(x_n)$ is unbounded, show that there exist a subsequence $(x_{n_k})$ such that $\lim(1/x_n)=0$. Given the sequence $(x_n)$ is unbounded, show that there exist a subsequence $(x_{n_k})$ such that $\lim(1/x_{n_k})=0$. I guess I have to prove that $(x_{n_k})$ diverge, but I don't know how to carry on. Thanks. |
| "The problem is who can we get to replace her" vs. "The problem is who we can get to replace her" "The problem is who can we get to replace her" vs. "The problem is who we can get to replace her" Which one is correct and why? | Changing subject and verb positions in statements and questions We always change subject and verb positions in whenever we want to ask a question such as "What is your name?". But when it comes to statements like the following, which form is correct? I don't understand what are you talking about. I don't understand what you are talking about. Another example Do you know what time is it? Do you know what time it is? Another example Do you care how do I feel about this? Do you care how I feel about this? |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```
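The "approximate statistics" rows in this card report the minimum, mean, and maximum character length over the first 1000 samples of each column. A minimal sketch of how such figures could be computed is shown below. The helper name `char_length_stats` and the sample strings are hypothetical, and the exact rounding the card generator uses is an assumption.

```python
def char_length_stats(texts, limit=1000):
    """Return min, mean and max character length over the first `limit` texts."""
    lengths = [len(text) for text in texts[:limit]]
    return {
        "min": min(lengths),
        "mean": round(sum(lengths) / len(lengths), 2),  # assumed 2-decimal rounding
        "max": max(lengths),
    }


# Hypothetical stand-ins for one text column of an evaluation dataset.
sample_posts = [
    "How do I sort a list?",
    "Sorting a list in Python",
    "Why is my loop slow?",
]
stats = char_length_stats(sample_posts)
```

Because only the first 1000 rows are inspected, the figures describe the head of the dataset rather than all 250,519 evaluation samples.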

quora

* Dataset: [quora](https://huggingface.co/datasets/sentence-transformers/quora-duplicates) at [451a485](https://huggingface.co/datasets/sentence-transformers/quora-duplicates/tree/451a4850bd141edb44ade1b5828c259abd762cdb)

* Size: 101,762 evaluation samples

* Columns: anchor, positive, and negative

* Approximate statistics based on the first 1000 samples:

|         | anchor | positive | negative |
|:--------|:-------|:---------|:---------|
| type    | string | string | string |
| details | <ul><li>min: 15 characters</li><li>mean: 52.48 characters</li><li>max: 164 characters</li></ul> | <ul><li>min: 12 characters</li><li>mean: 52.86 characters</li><li>max: 162 characters</li></ul> | <ul><li>min: 12 characters</li><li>mean: 56.18 characters</li><li>max: 298 characters</li></ul> |

* Samples:

| anchor | positive | negative |
|:-------|:---------|:---------|
| Is pornography an art? | Can pornography be art? | Does pornography involve the objectification of women? |
| How can I improve my speaking in public? | How can I improve my public speaking ability? | How do I improve my vocabulary and English speaking skills? I am a 22 year old software engineer and come from a Telugu medium background. I am able to write well, but my speaking skills are poor. |
| How do I develop better people skills? | How can I get better people skills? | How do I get better at Minecraft? |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```
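For datasets with anchor, positive, and negative columns, such as quora above, the inner `MultipleNegativesRankingLoss` can use the explicit negatives as extra candidates alongside the in-batch positives. The sketch below is a simplified pure-Python illustration, not the library code: it assumes cosine similarity scaled by 20 and a cross-entropy over all candidates, where the correct candidate for anchor `i` is positive `i`. The function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


def ranking_loss(anchors, positives, negatives=None, scale=20.0):
    """Mean cross-entropy of picking positive i for anchor i among all candidates."""
    # Candidates: every positive in the batch, followed by any explicit negatives.
    candidates = list(positives) + list(negatives or [])
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [scale * cosine(anchor, cand) for cand in candidates]
        # Numerically stable log-sum-exp over the candidate scores.
        top = max(logits)
        log_z = top + math.log(sum(math.exp(l - top) for l in logits))
        total += log_z - logits[i]
    return total / len(anchors)


# Toy 2-dimensional embeddings for a batch of two pairs.
anchors = [[1.0, 0.0], [0.0, 1.0]]
positives = [[1.0, 0.0], [0.0, 1.0]]
hard_negatives = [[1.0, 0.0]]  # as similar to the first anchor as its positive

loss_pairs = ranking_loss(anchors, positives)
loss_triplets = ranking_loss(anchors, positives, hard_negatives)
```

In this toy batch the hard negative competes with the first anchor's positive, so `loss_triplets` is larger than `loss_pairs`.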

wikianswers_duplicates

* Dataset: [wikianswers_duplicates](https://huggingface.co/datasets/sentence-transformers/wikianswers-duplicates) at [9af6367](https://huggingface.co/datasets/sentence-transformers/wikianswers-duplicates/tree/9af6367d1ad084daf8a9de9c21bc33fcdc7770d0)

* Size: 10,000 evaluation samples

* Columns: anchor and positive

* Approximate statistics based on the first 1000 samples:

|         | anchor | positive |
|:--------|:-------|:---------|
| type    | string | string |
| details | <ul><li>min: 14 characters</li><li>mean: 47.88 characters</li><li>max: 145 characters</li></ul> | <ul><li>min: 15 characters</li><li>mean: 47.76 characters</li><li>max: 201 characters</li></ul> |

* Samples:

| anchor | positive |
|:-------|:---------|
| Can you get pregnant if tubes are clamped? | How long can your fallopian tubes stay clamped? |
| Is there any object that are triangular prism? | Is a trapezium the same as a triangular prism? |
| Where is the neutral switch located on a 2000 ford explorer? | Ford f150 1996 safety switch? |

* Loss: [MatryoshkaLoss](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json
{
    "loss": "MultipleNegativesRankingLoss",
    "matryoshka_dims": [1024, 512, 256, 128, 64, 32],
    "matryoshka_weights": [1, 1, 1, 1, 1, 1],
    "n_dims_per_step": -1
}
```

all_nli

* Dataset: [all_nli](https://huggingface.co/datasets/sentence-transformers/all-nli) at [d482672](https://huggingface.co/datasets/sentence-transformers/all-nli/tree/d482672c8e74ce18da116f430137434ba2e52fab)

* Size: 6,584 evaluation samples

* Columns: anchor, positive, and negative

* Approximate statistics based on the first 1000 samples:

|         | anchor | positive | negative |
|:--------|:-------|:---------|:---------|
| type    | string | string | string |
| details | <ul><li>min: 15 characters</li><li>mean: 72.82 characters</li><li>max: 300 characters</li></ul> | <ul><li>min: 12 characters</li><li>mean: 34.11 characters</li><li>max: 126 characters</li></ul> | <ul><li>min: 11 characters</li><li>mean: 36.38 characters</li><li>max: 121 characters</li></ul> |

* Samples:

| anchor | positive | negative |