FalCodecompiler

Introduction to Falcon3-decompiler-7b

Falcon3-decompiler-7b takes the pseudo-C that Ghidra's decompiler produces for x86 binaries and rewrites it as more readable C.

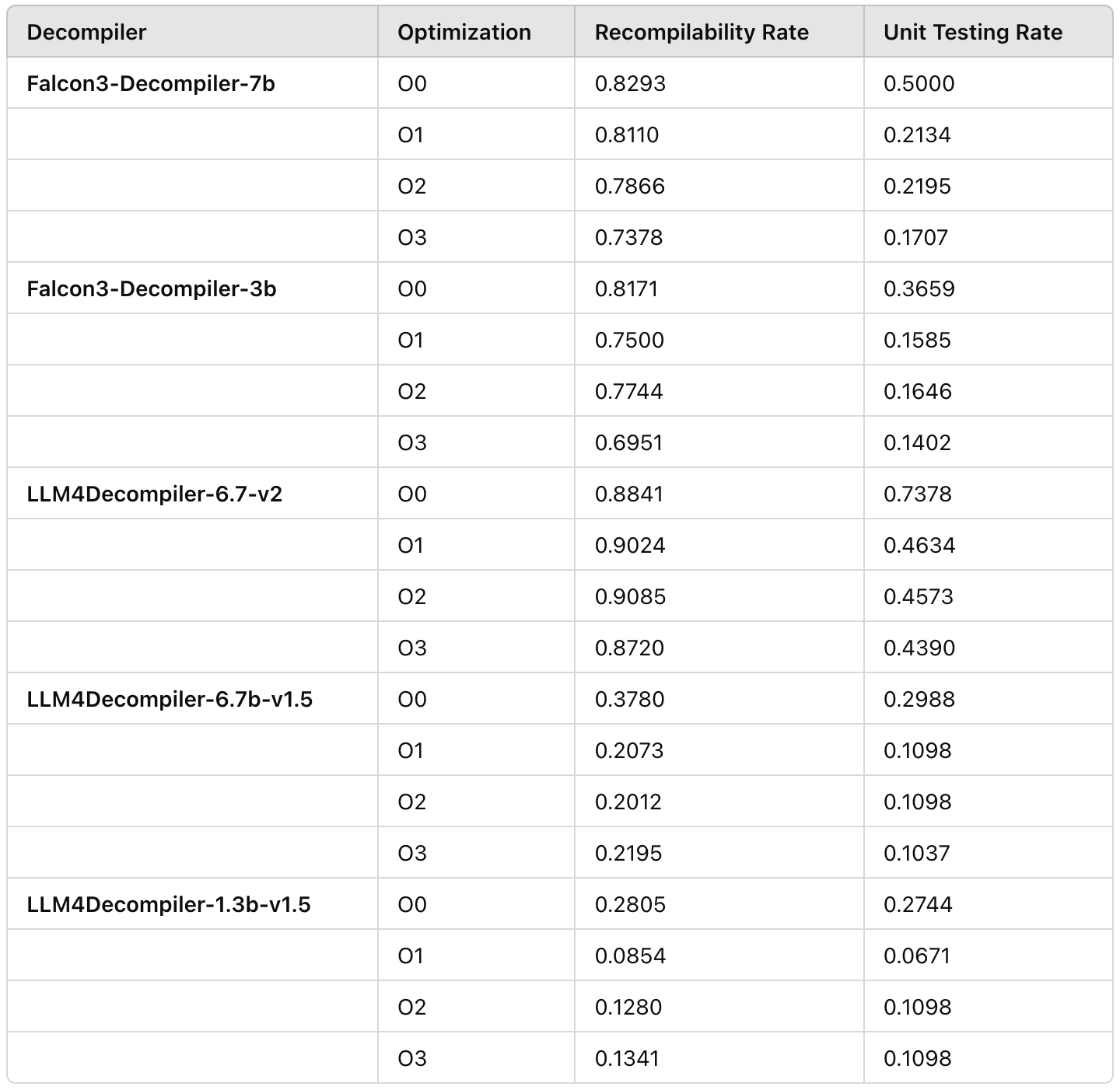

Evaluation Results

The model was evaluated on the HumanEval benchmark from LLM4Decompile.
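As we understand LLM4Decompile's evaluation, a decompiled function counts as correct when it compiles together with the benchmark's test code and the resulting program runs without failing an assertion. This property is called re-executability. The sketch below illustrates that check for a single sample. It is not LLM4Decompile's actual evaluation script. It assumes a C compiler named `gcc` is on the PATH, and the names `is_re_executable` and `re_executability_rate`, along with the harness strings, are hypothetical.

```python
import os
import subprocess
import tempfile


def is_re_executable(decompiled_c, test_harness_c, compiler="gcc", timeout=10.0):
    """Hypothetical helper: compile decompiled_c together with a test harness and run it.

    Returns True only if compilation succeeds and the program exits with status 0
    (e.g. every assert() in the harness passed).
    """
    with tempfile.TemporaryDirectory() as tmp:
        src_path = os.path.join(tmp, "sample.c")
        exe_path = os.path.join(tmp, "sample")
        with open(src_path, "w") as f:
            f.write(decompiled_c + "\n" + test_harness_c + "\n")
        try:
            build = subprocess.run([compiler, src_path, "-o", exe_path, "-lm"],
                                   capture_output=True, timeout=timeout)
            if build.returncode != 0:
                return False  # the decompiled code does not compile
            run = subprocess.run([exe_path], capture_output=True, timeout=timeout)
        except (OSError, subprocess.TimeoutExpired):
            return False  # compiler not found, or the build/run hung
        return run.returncode == 0


def re_executability_rate(results):
    """Fraction of samples that passed, given a list of booleans."""
    return sum(results) / len(results) if results else 0.0
```

The timeout matters in practice. Model output can contain infinite loops, and a sample that hangs is simply counted as a failure.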

How to Use

Here is an example of how to use our model. Note: replace `asm_func` with the Ghidra output for the function you want to decompile.
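The usage example wraps the Ghidra output in a fixed two-line prompt (`# This is the assembly code:` ... `# What is the source code?`). If you decompile many functions, it may help to factor that template into a small helper so the wording never drifts. This is a minimal sketch that assumes the model expects exactly the template shown in the example. `build_prompt` is a hypothetical name, not part of the model's API.

```python
# Prompt template copied verbatim from the usage example (assumption: the model
# expects exactly this wording, so it should not be edited).
PROMPT_PREFIX = "# This is the assembly code:\n"
PROMPT_SUFFIX = "\n# What is the source code?\n"


def build_prompt(ghidra_c):
    """Hypothetical helper: wrap one function's Ghidra pseudo-C in the decompilation prompt."""
    return PROMPT_PREFIX + ghidra_c.strip() + PROMPT_SUFFIX


print(build_prompt("  int f(void) { return 0; }\n"))
```

Stripping the input first mirrors `asm_func.strip()` in the example. Leading or trailing blank lines from a copy-paste therefore do not change the prompt.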

Decompilation: use falcon3-decompiler-7b to translate Ghidra decompilation output into more readable code:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM
import torch

model_path = 'Neo111x/falcon3-decompiler-7b-v1'  # Hugging Face Hub model ID

# Load the tokenizer and model once; device_map="auto" places the weights on available GPU(s)
tokenizer = AutoTokenizer.from_pretrained(model_path)
model = AutoModelForCausalLM.from_pretrained(model_path, torch_dtype="auto", device_map="auto")

# Ghidra decompiler output for the function to clean up
asm_func = """
char * func0(char **param_1,int param_2)

{
  char **ppcVar1;
  char *__s;
  size_t sVar2;
  int iVar3;
  char *pcVar4;

  pcVar4 = "";
  if (0 < param_2) {
    iVar3 = 0;
    ppcVar1 = param_1 + (ulong)(param_2 - 1) + 1;
    do {
      __s = *param_1;
      sVar2 = strlen(__s);
      if (iVar3 < (int)sVar2) {
        pcVar4 = __s;
        iVar3 = (int)sVar2;
      }
      param_1 = param_1 + 1;
    } while (param_1 != ppcVar1);
  }
  return pcVar4;
}
"""

# Wrap the input in the prompt format the model expects
before = "# This is the assembly code:\n"
after = "\n# What is the source code?\n"
asm_func = before + asm_func.strip() + after

# Encode the prompt and move it to the same device as the model
inputs = tokenizer(asm_func, return_tensors="pt").to(model.device)

with torch.no_grad():
    # Maximum length is 4096 tokens, so prompt length + max_new_tokens must stay below that
    outputs = model.generate(**inputs, max_new_tokens=2048)

# Decode only the newly generated tokens, dropping the prompt and special tokens such as EOS
c_func_decompile = tokenizer.decode(outputs[0][inputs["input_ids"].shape[1]:], skip_special_tokens=True)

# Note: this decompiles a single function, even if the original file contains several
print(f'decompiled function:\n{c_func_decompile}')
```
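The model works on one function at a time. A Ghidra export that contains several functions therefore has to be split before each piece is substituted for `asm_func`. This minimal sketch uses naive brace matching. It assumes that braces inside string or character literals and comments are absent or balanced, and that the export contains only function definitions (a global `struct { ... };` would be split off as its own chunk). `split_functions` is a hypothetical helper, not part of the model or of Ghidra.

```python
from typing import List


def split_functions(ghidra_export: str) -> List[str]:
    """Hypothetical helper: split pseudo-C with several function definitions into one string each.

    Each chunk runs from the end of the previous top-level '}' to the next one,
    so the signature preceding a body stays attached to that body.
    """
    functions = []
    depth = 0
    start = 0
    for i, ch in enumerate(ghidra_export):
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:  # closed a top-level body
                chunk = ghidra_export[start:i + 1].strip()
                if chunk:
                    functions.append(chunk)
                start = i + 1
    return functions


export = "int a(void)\n{\n  return 1;\n}\n\nint b(int x)\n{\n  if (x) {\n    return 2;\n  }\n  return 3;\n}\n"
for func in split_functions(export):
    print(func.splitlines()[0])
```

Each returned string can then be wrapped in the same prompt and passed through `model.generate` separately. This keeps every request well inside the 4096-token limit noted in the example.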

Contact

If you have any questions, please raise an issue.