ViTGaze 👀

Gaze Following with Interaction Features in Vision Transformers

Yuehao Song¹, Xinggang Wang¹ ✉️, Jingfeng Yao¹, Wenyu Liu¹, Jinglin Zhang², Xiangmin Xu³

¹ Huazhong University of Science and Technology, ² Shandong University, ³ South China University of Technology

(✉️ corresponding author)

Accepted by Visual Intelligence (Paper)

News

- Nov. 21st, 2024: ViTGaze has been accepted by Visual Intelligence! 🎉
- Mar. 25th, 2024: We released an initial version of ViTGaze.
- Mar. 19th, 2024: We released our paper on arXiv. Code/Models are coming soon. Please stay tuned! ☕️

Introduction

Plain Vision Transformers can also perform gaze following with the simple ViTGaze framework!

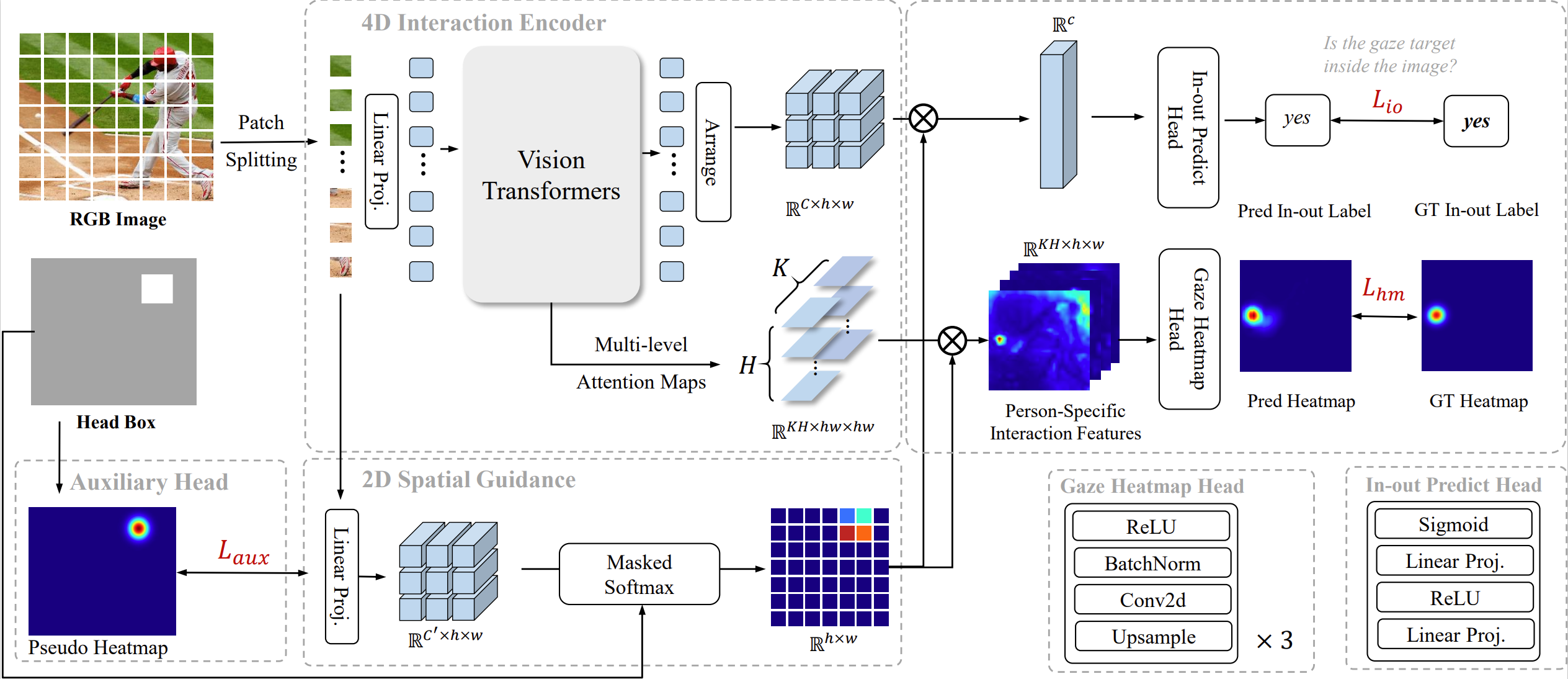

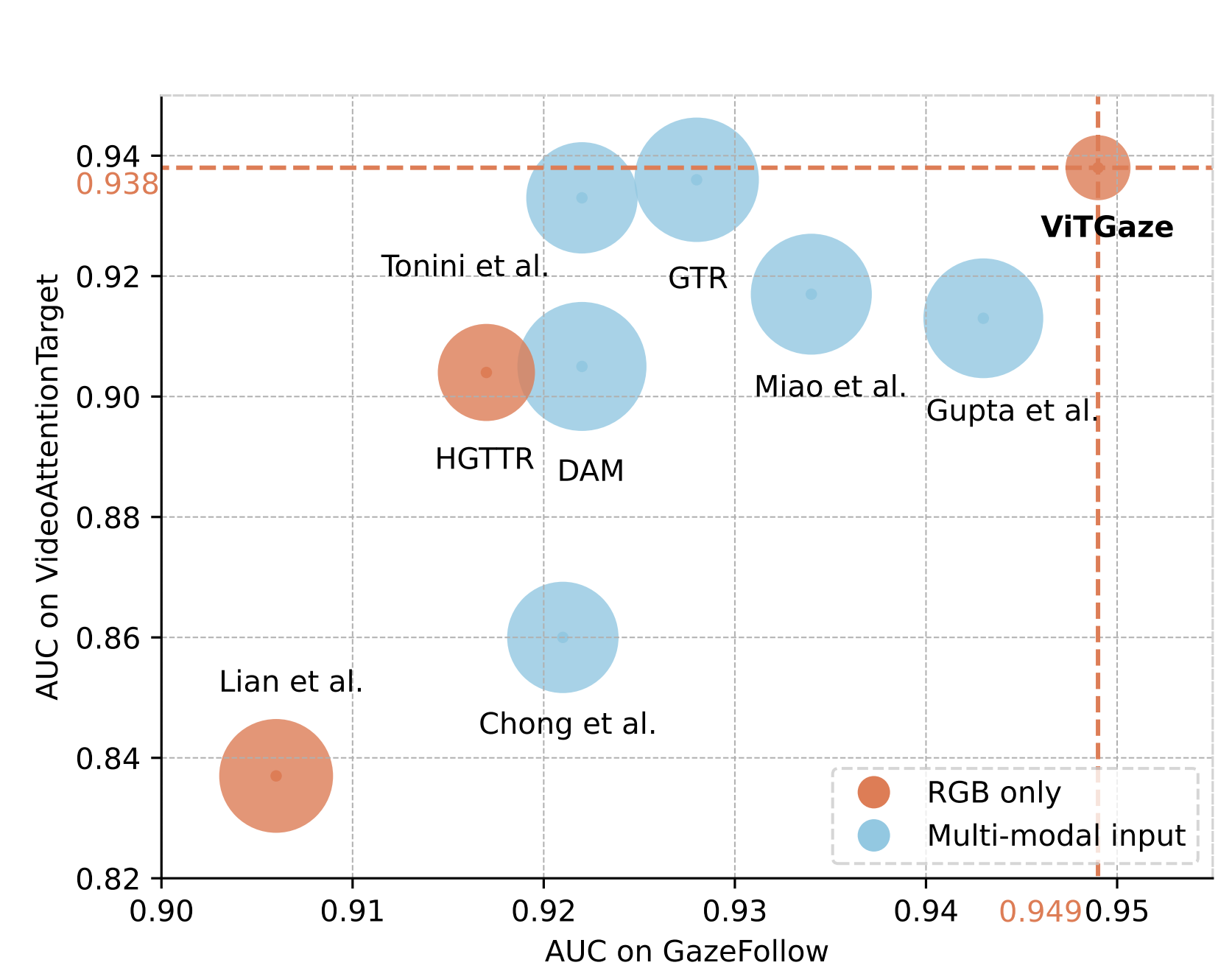

Inspired by the remarkable success of pre-trained plain Vision Transformers (ViTs), we introduce ViTGaze, a novel single-modality gaze following framework. In contrast to previous methods, ViTGaze is built mainly on powerful encoders: the decoder accounts for less than 1% of the parameters. Our principal insight is that the inter-token interactions within self-attention can be transferred to interactions between humans and scenes. ViTGaze achieves state-of-the-art (SOTA) performance among all single-modality methods (3.4% improvement in AUC, 5.1% improvement in AP) and performance comparable to multi-modality methods with 59% fewer parameters.
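To make the self-attention insight concrete, here is a toy sketch in plain Python. It is not the ViTGaze implementation: the helper names (`attention_weights`, `person_scene_map`), the four 2-D "patch tokens", and the head mask are all hypothetical and chosen only for illustration. It computes scaled dot-product attention weights between patch tokens, then averages the attention rows of the tokens that fall inside a person's head region to obtain one weight per scene patch.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys):
    """Scaled dot-product attention weights: one row per query token."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    return [
        softmax([scale * sum(q * k for q, k in zip(qv, kv)) for kv in keys])
        for qv in queries
    ]

def person_scene_map(attn, head_mask):
    """Weighted average of the attention rows selected by head_mask.

    head_mask[i] > 0 marks patch token i as part of the person's head
    (a hypothetical stand-in for head-position guidance).
    """
    total = sum(head_mask)
    n = len(attn[0])
    return [
        sum(w * attn[i][j] for i, w in enumerate(head_mask)) / total
        for j in range(n)
    ]

# Toy input: 4 patch tokens with 2-D features (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
attn = attention_weights(tokens, tokens)

# Assume the person's head covers only patch 0.
head_mask = [1.0, 0.0, 0.0, 0.0]
interaction = person_scene_map(attn, head_mask)
print(interaction)  # one attention weight per scene patch
```

Because each attention row is a probability distribution over patches, the pooled map is as well. In this sketch it plays the role of a coarse person-to-scene interaction feature that a lightweight decoder could refine into a gaze heatmap.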

Results

Results from the ViTGaze paper

| GazeFollow AUC | GazeFollow Avg. Dist. | GazeFollow Min. Dist. | VideoAttentionTarget AUC | VideoAttentionTarget Dist. | VideoAttentionTarget AP |
|---|---|---|---|---|---|
| 0.949 | 0.105 | 0.047 | 0.938 | 0.102 | 0.905 |

Corresponding checkpoints are released:

- GazeFollow: GoogleDrive

- VideoAttentionTarget: GoogleDrive
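For readers unfamiliar with the GazeFollow distance columns in the results table, the sketch below shows how such metrics are commonly computed. It assumes the usual GazeFollow convention, which this README does not spell out: coordinates are normalized to [0, 1], Avg. Dist. is the L2 distance from the prediction to the mean of the annotators' gaze points, and Min. Dist. is the smallest L2 distance to any single annotation. The function name `gaze_distances` and the sample points are hypothetical.

```python
import math

def l2(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def gaze_distances(pred, annotations):
    """Return (avg_dist, min_dist) for one test sample.

    pred: predicted gaze point (x, y) in normalized image coordinates.
    annotations: list of annotated gaze points (x, y), one per annotator.
    Assumed convention: avg_dist compares against the mean annotation,
    min_dist against the closest individual annotation.
    """
    n = len(annotations)
    mean_gt = (
        sum(a[0] for a in annotations) / n,
        sum(a[1] for a in annotations) / n,
    )
    avg_dist = l2(pred, mean_gt)
    min_dist = min(l2(pred, a) for a in annotations)
    return avg_dist, min_dist

# Illustrative values only, not taken from any dataset.
avg_dist, min_dist = gaze_distances((0.5, 0.5), [(0.5, 0.6), (0.5, 0.8)])
print(avg_dist, min_dist)
```

Dataset-level numbers would then be the mean of these per-sample values over the test set; lower is better for both distances.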

Getting Started

Acknowledgements

ViTGaze is based on detectron2. We use the efficient multi-head attention implemented in the xFormers library.

Citation

If you find ViTGaze useful in your research or applications, please consider giving us a star 🌟 and citing it with the following BibTeX entry.

@article{song2024vitgaze,
  title   = {ViTGaze: Gaze Following with Interaction Features in Vision Transformers},
  author  = {Song, Yuehao and Wang, Xinggang and Yao, Jingfeng and Liu, Wenyu and Zhang, Jinglin and Xu, Xiangmin},
  journal = {Visual Intelligence},
  volume  = {2},
  number  = {31},
  year    = {2024},
  url     = {https://doi.org/10.1007/s44267-024-00064-9}
}

Model tree for yhsong/ViTGaze

Base model

facebook/dinov2-small